45. Compound AI systems

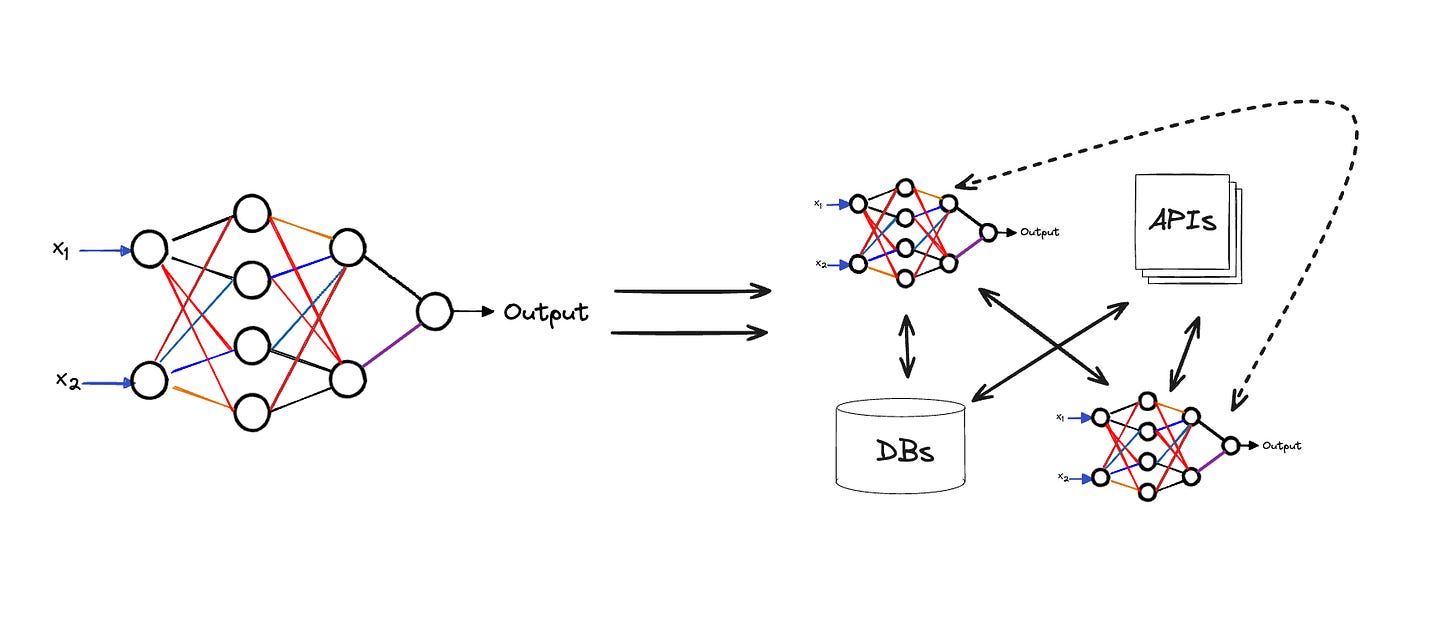

State-of-the-art AI results are increasingly obtained by compound systems with multiple components, not just monolithic models.

Introduction

A few numbers:

60% of LLM applications use some form of RAG

30% use multi-step chains

A compound AI system is a system that tackles AI tasks using multiple interacting components, including multiple calls to models, retrievers, or external tools.

In contrast, an AI model is simply a statistical model, e.g., a Transformer that predicts the next token in text.

More and more state-of-the-art results are obtained using compound systems. Why is that?

Iterating on a system design is much faster than waiting for the training to finish.

Tasks are easier to improve with system design: for example, suppose that the current best LLM can solve coding contest problems 30% of the time, and tripling its training budget would increase this to 35%; this is still not reliable enough to win a coding contest! In contrast, engineering a system that samples from the model multiple times, tests each sample, etc. might increase performance to 80% with today’s models.

Machine learning models are inherently limited because they are trained on static datasets, so their “knowledge” is fixed. Therefore, ML engineers need to combine models with other components, such as search and retrieval, to incorporate timely data.

Neural network models alone are hard to control: while training will influence them, it is nearly impossible to guarantee that a model will avoid certain behaviours. Using an AI system instead of a model can help ML engineers control behaviour more tightly.
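To make the coding contest example above concrete, here is a minimal sketch of the "sample several times, test each sample" idea. It rests on two simplifying assumptions that are mine, not a measured result: samples are independent, and the tests reliably tell a correct solution from a wrong one. The names `success_probability`, `sample_and_test`, `toy_model` and `toy_tests` are hypothetical.

```python
import random


def success_probability(p_single: float, k: int) -> float:
    """Chance that at least one of k independent samples is correct."""
    return 1.0 - (1.0 - p_single) ** k


def sample_and_test(generate, passes_tests, k: int):
    """Draw up to k candidates and return the first one that passes the tests."""
    for _ in range(k):
        candidate = generate()
        if passes_tests(candidate):
            return candidate
    return None  # no candidate passed within the sampling budget


# Toy stand-ins (assumptions): a "model" that is right 30% of the time
# and a test harness that recognises a correct answer.
rng = random.Random(0)


def toy_model() -> str:
    return "correct" if rng.random() < 0.30 else "wrong"


def toy_tests(candidate: str) -> bool:
    return candidate == "correct"


if __name__ == "__main__":
    for k in (1, 5, 10):
        print(k, round(success_probability(0.30, k), 3))
    print(sample_and_test(toy_model, toy_tests, k=5))
```

Under these assumptions, five samples from a 30% model already give roughly an 83% chance that at least one passes. Real samples are correlated and real tests are imperfect, so treat this as an upper-bound intuition rather than a prediction.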

Developing compound AI systems

Designing such systems, however, comes with its own share of challenges.

Design Space

The range of possible system designs for a given task is vast. For example, even in the simple case of retrieval-augmented generation (RAG) with a retriever and language model, there are:

Many retrieval and language models to choose from

Other techniques to improve retrieval quality, such as query expansion or reranking models

Techniques to improve the LLM’s generated output (e.g., running another LLM to check that the output relates to the retrieved passages)

In addition, ML engineers need to allocate limited resources, like latency and cost budgets, among the system components. For example, if you want to answer RAG questions in 100 milliseconds, should you budget 20 ms for the retriever and 80 ms for the LLM, or the other way around?
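One way to reason about the budget question is to time each stage against its own allowance. The sketch below is a toy two-stage RAG pipeline: the keyword-overlap retriever, the `generate` placeholder (standing in for a real LLM call), the `DOCS` list and the 20/80 ms split are all assumptions made for illustration.

```python
import time
from dataclasses import dataclass


@dataclass
class StageTiming:
    name: str
    budget_ms: float
    elapsed_ms: float

    @property
    def over_budget(self) -> bool:
        return self.elapsed_ms > self.budget_ms


def timed(name, budget_ms, fn, *args):
    """Run fn(*args) and record how long it took against its budget."""
    start = time.perf_counter()
    result = fn(*args)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, StageTiming(name, budget_ms, elapsed_ms)


# Tiny in-memory corpus (illustrative only).
DOCS = [
    "Query expansion rewrites the user query to improve recall.",
    "Reranking reorders retrieved passages with a second model.",
    "Latency budgets cap how long each stage may take.",
]


def retrieve(query, docs, top_k=1):
    """Toy retriever: rank documents by word overlap with the query."""
    q = set(query.lower().split())
    ranked = sorted(docs, key=lambda d: len(q & set(d.lower().split())), reverse=True)
    return ranked[:top_k]


def generate(query, passages):
    """Placeholder for an LLM call: just assembles the prompt it would receive."""
    return f"Q: {query}\nContext: {' '.join(passages)}"


def answer(query, retriever_budget_ms=20.0, llm_budget_ms=80.0):
    passages, t_retrieve = timed("retriever", retriever_budget_ms, retrieve, query, DOCS)
    text, t_llm = timed("llm", llm_budget_ms, generate, query, passages)
    return text, [t_retrieve, t_llm]


if __name__ == "__main__":
    text, timings = answer("what is reranking")
    print(text)
    for t in timings:
        print(f"{t.name}: {t.elapsed_ms:.3f} ms of {t.budget_ms} ms, over budget: {t.over_budget}")
```

With per-stage timings in hand, you can try both splits (20/80 and 80/20) on real traffic and compare answer quality, instead of guessing.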

Optimization

Often in ML, maximizing the quality of a compound system requires co-optimizing the components to work well together.

In single-model development à la PyTorch, engineers can easily optimize a model end to end because the whole model is differentiable.

However, compound AI systems contain non-differentiable components like search engines or code interpreters, and thus require new methods of optimization.

Optimizing these compound AI systems is still a new research area; for example, DSPy offers a general optimizer for pipelines of pretrained LLMs.
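When gradients are not available, the simplest fallback is to treat the whole pipeline as a black box and search over its settings. The sketch below uses plain exhaustive grid search; it is not how DSPy works, just the most basic instance of non-differentiable optimization. The search space and the `evaluate` function are hypothetical: in practice `evaluate` would run the pipeline on a held-out dev set and return a quality metric.

```python
from itertools import product

# Hypothetical knobs of a RAG pipeline.
SEARCH_SPACE = {
    "top_k": [1, 3, 5],
    "use_reranker": [False, True],
    "prompt_style": ["terse", "step_by_step"],
}


def evaluate(config) -> float:
    """Stand-in metric (an assumption): replace with a real dev-set evaluation."""
    score = 0.50
    score += 0.05 * min(config["top_k"], 3)  # more passages help, up to a point
    score += 0.10 if config["use_reranker"] else 0.0
    score += 0.05 if config["prompt_style"] == "step_by_step" else 0.0
    return score


def grid_search(space, metric):
    """Try every combination and keep the first configuration with the best score."""
    keys = list(space)
    best_config, best_score = None, float("-inf")
    for values in product(*(space[k] for k in keys)):
        config = dict(zip(keys, values))
        score = metric(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


if __name__ == "__main__":
    best_config, best_score = grid_search(SEARCH_SPACE, evaluate)
    print(best_config, round(best_score, 2))
```

Grid search only needs a score per configuration, so it does not care that a search engine or a code interpreter sits in the middle of the pipeline. The cost is that the number of evaluations grows multiplicatively with every knob you add.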

Operations

Machine learning operations (MLOps) become more challenging for compound AI systems. For example, while it is easy to track success rates for a traditional ML model like a spam classifier, how should ML engineers track and debug the performance of an LLM agent for the same task, which might use a variable number of “reflection” steps or external API calls to classify a message?
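One possible starting point is to record a trace per request, so that the final label can be tracked like a classifier's while the variable intermediate steps remain inspectable. The sketch below is a toy: the `Trace` class, the step kinds and the keyword rule inside `classify_with_agent` are assumptions standing in for a real agent and a real tracing tool.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Trace:
    message: str
    steps: List[Tuple[str, str]] = field(default_factory=list)
    label: Optional[str] = None

    def log(self, kind: str, detail: str) -> None:
        self.steps.append((kind, detail))


def classify_with_agent(message: str) -> Trace:
    """Toy agent: takes extra steps only when the message looks suspicious."""
    trace = Trace(message)
    trace.log("llm_call", "initial guess")
    suspicious = "free money" in message.lower()
    if suspicious:
        trace.log("api_call", "sender reputation lookup")
        trace.log("reflection", "re-check guess against lookup result")
    trace.label = "spam" if suspicious else "ham"
    return trace


def summarize(traces, gold_labels):
    """Aggregate classifier-style accuracy together with agent-style step counts."""
    correct = sum(t.label == g for t, g in zip(traces, gold_labels))
    steps_by_kind = Counter(kind for t in traces for kind, _ in t.steps)
    return {
        "accuracy": correct / len(traces),
        "avg_steps": sum(len(t.steps) for t in traces) / len(traces),
        "steps_by_kind": dict(steps_by_kind),
    }


if __name__ == "__main__":
    messages = ["Free money now!!!", "Lunch at noon?"]
    traces = [classify_with_agent(m) for m in messages]
    print(summarize(traces, ["spam", "ham"]))
```

Accuracy alone would hide the fact that one message cost three steps and the other only one. Keeping the per-step log next to the label is what lets you debug cost and latency regressions later.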

Conclusions

There are some emerging paradigms in the LLMOps space. Many engineers are stitching together different AI stacks:

Agents are LLMs interacting with each other

RAG is a stack of information retrieval applications interacting with LLMs

LangChain/LlamaIndex give you tools for controlling outputs with chain of thought, self-consistency, etc.

Are you using any of these tools in your ML stack at your current job?

Let me know in the comments!

Ludo